Many academics have strong incentives to influence policymaking, but may not know where to start. We searched systematically for, and synthesised, the ‘how to’ advice in the academic peer-reviewed and grey literatures. We condense this advice into eight main recommendations: (1) do high quality research; (2) make your research relevant and readable; (3) understand policy processes; (4) be accessible to policymakers: engage routinely, flexibly, and humbly; (5) decide if you want to be an issue advocate or honest broker; (6) build relationships (and ground rules) with policymakers; (7) be ‘entrepreneurial’ or find someone who is; and (8) reflect continuously: should you engage, do you want to, and is it working? This advice seems like common sense. However, it masks major inconsistencies, which reflect different beliefs about the nature of the problem to be solved when using this advice. Furthermore, if not accompanied by critical analysis and insights from the peer-reviewed literature, it could provide misleading guidance for people new to this field.

Many academics have strong incentives to influence policymaking, as extrinsic motivation to show the ‘impact’ of their work to funding bodies, or intrinsic motivation to make a difference to policy. However, they may not know where to start (Evans and Cvitanovic, 2018). Although many academics have personal experience, or have attended impact training, there is a limited empirical evidence base to inform academics wishing to create impact. Although there is a significant amount of commentary about the processes and contexts affecting evidence use in policy and practice (Head, 2010; Whitty, 2015), the relative importance of different factors on achieving ‘impact’ has not been established (Haynes et al., 2011; Douglas, 2012; Wilkinson, 2017). Nor have common understandings of the concepts of ‘use’ or ‘impact’ themselves been developed. As pointed out by one of our reviewers, even empirical and conceptual papers routinely fail to define or unpack these terms, with some exceptions (Weiss, 1979; Nutley et al., 2007; Parkhurst, 2017). Perhaps because of this theoretical paucity, there are few empirical evaluations of strategies to increase the uptake of evidence in policy and practice (Boaz et al., 2011), and those that exist tend not to offer advice for the individual academic. How, then, should academics engage with policy?

There are substantial numbers of blogs, editorials, and commentaries that provide tips and suggestions for academics on how best to increase their impact, how to engage most effectively, or similar topics. We condense this advice into eight main tips: produce high quality research, make it relevant, understand the policy processes in which you engage, be accessible to policymakers, decide if you want to offer policy advice, build networks, be ‘entrepreneurial’, and reflect on your activities.

Taken at face value, much of this advice is common sense, perhaps because it is inevitably bland and generic. When we interrogate it in more detail, we identify major inconsistencies in advice regarding: (a) what counts as good evidence, (b) how best to communicate it, (c) what policy engagement is for, (d) if engagement is to frame problems or simply measure them according to an existing frame, (e) how far to go to be useful and influential, (f) if you need and can produce ground rules or trust, (g) what entrepreneurial means, and (h) how much choice researchers should have to engage in policymaking or not.

These inconsistencies reflect different beliefs about the nature of the problem to be solved when using this advice, which derive from unresolved debates about the nature and role of science and policy. We focus on three dilemmas that arise from engagement—for example, should you ‘co-produce’ research and policy and give policy recommendations?—and reflect on wider systemic issues, such as the causes of unequal rewards and punishments for engagement. Perhaps the biggest dilemma reflects the fact that engagement is a career choice, not an event: how far should you go to encourage the use of evidence in policy if you began your career as a researcher? These debates are rehearsed more fully and regularly in the peer-reviewed literature (Hammersley, 2013; de Leeuw et al., 2008; Fafard, 2015; Smith and Stewart, 2015; Smith and Stewart, 2017; Oliver and Faul, 2018), which have spawned narrative reviews of policy theory and systematic reviews of the literature on the ‘barriers and facilitators’ to the use of evidence in policy. For example, we know from policy studies that policymakers seek ways to act decisively, not produce more evidence until it speaks for itself; and, there is no simple way to link the supply of evidence to its demand in a policymaking system (see Cairney and Kwiatkowski, 2017). We draw on this literature to highlight inconsistencies and weaknesses in the advice offered to academics.

We assess how useful the ‘how to’ advice is for academics, to what extent the advice reflects the reality of policymaking and evidence use (based on our knowledge of the empirical and theoretical literatures, described more fully in Cairney and Oliver, 2018) and explore the implications of any mismatch between the two. We map and interrogate the ‘how to’ advice, by comparing it with the empirical and theoretical literature on creating impact, and on the policymaking context more broadly. We use these literatures to highlight key choices and tensions in engaging with policymakers, and signpost more useful, informed advice for academics on when, how, and if to engage with policymakers.

Systematic review is a method to synthesise diverse evidence types on a clearly defined problem (Petticrew and Roberts, 2008). Although most commonly associated with statistical methods to aggregate effect sizes (more accurately called meta-analyses), systematic reviews can be conducted on any body of written evidence, including grey or unpublished literature (Tyndall, 2008). All systematic reviews aim to be transparent, replicable and exhaustive (resources allowing), and take steps to report the decisions made, the methods used to identify relevant evidence, and how this evidence was synthesised (Gough et al., 2012). Primarily they involve clearly defined searches, inclusion and exclusion processes, and a quality assessment/synthesis process.

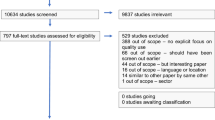

We searched three major electronic databases (Scopus, Web of Science, Google Scholar) and selected websites (e.g., ODI, Research Fortnight, Wonkhe) and journals (including Evidence and Policy, Policy and Politics, Research Policy), using a combination of terms. Terms such as evidence and impact were tested to search for articles explaining how to better ‘use’ evidence, or how to create policy ‘impact’. After testing, the search was conducted by combining the following terms, tailored to each database: ((evidence or science or scientist or researchers or impact), (help or advi* or tip* or "how to" or relevan*)) (policy* OR practic* OR government* OR parliament*). We checked the full text of studies where available and added them to a database for data extraction. We conducted searches between June 30th and August 3rd 2018. We identified studies for data extraction when they covered these areas: tips for researchers, tips for policymakers, types or characteristics of useful research, and other factors.

We included academic, policy and grey publications which offered advice to academics or policymakers on how to engage better with each other. We did not include: studies which explored the factors leading to evidence use, general commentaries on the roles of academics, or empirical analyses of the various initiatives, interventions, structures and roles of academics and researchers in policy (unless they offered primary data and tips on how to improve); book reviews; or, news reports. However, we use some of these publications to reflect more broadly on the historical changes to the academic-policy relationship.

We included 86 academic and non-academic publications in this review (see Table 1 for an overview). Although we found reports dating back to the 1950s on how governments and presidents (predominantly UK/US) do or do not use scientific advisors (Marshall, 1980; Bondi, 1982; Mayer, 1982; Lepkowski, 1984; Koshland Jr. et al., 1988; Sy, 1989; Krige, 1990; Srinivasan, 2000) and committees (Sapolsky, 1968; Wolfle, 1968; Editorial, 1972; Walsh, 1973; Nichols, 1988; Young and Jones, 1994; Lawler, 1997; Masood, 1999; Morgan et al., 2001; Oakley et al., 2003; Allen et al., 2012), the earliest publication included was from 1971 (Aurum, 1971). Thirty-four were published in the last two years, reflecting ever-increasing interest in how academics can increase their impact on policy. Although some academic publications are included, we mainly found blogs, letters, and editorials, often in high-impact publications such as Cell, Science, Nature and the Lancet. Many were opinion pieces by people moving between policy and academic roles, or blogs by and for early career researchers on how to establish impactful careers.

Table 1 Search results

The advice is very consistent over the last 80 years, and between disciplines as diverse as gerontology, ecology, and economics. As noted in an earlier systematic review, previous studies have identified hundreds of factors which act as barriers to the uptake of evidence in policy (Oliver et al., 2014), albeit unsupported by empirical evidence. Many of the advisory pieces address these barriers, assuming rather than demonstrating that their simple advice will help ease the flow of evidence into policy. The pieces also often cite each other, even to the extent of using the exact phrasing. Therefore, the combination of previous academic reviews with our survey of ‘how to’ advice reinforces our sense of ‘saturation’, in which we have identified all of the most relevant advice (available in written form). In our synthesis, using thematic analysis, we condense these tips into eight main themes. Then, we analyse these tips critically, with reference to wider discussions in the peer-reviewed literature.

Researchers are advised to conduct high-quality, robust research (Boyd, 2013; Whitty, 2015; Docquier, 2017; Eisenstein, 2017) and provide it in a way that is timely, policy relevant, and easy to understand, but not at the expense of accuracy (Havens, 1992; Norse, 2005; Simera et al., 2010; Bilotta et al., 2015; Kerr et al., 2015; Olander et al., 2017; POST, 2017). Specific research methods, metrics and/or models should be used (Aguinis et al., 2010), with systematic reviews/evidence synthesis considered particularly useful for policymakers (Lavis et al., 2003; Sutherland, 2013; Caird et al., 2015; Andermann et al., 2016; Donnelly et al., 2018; Topp et al., 2018), and often also randomised controlled trials, properly piloted and evaluated (Walley et al., 2018). Truly interdisciplinary research is required to identify new perspectives (Chapman et al., 2015; Marshall and Cvitanovic, 2017) and explore the “practical significance” of research for policy and practice (Aguinis et al., 2010). Academics must communicate scientific uncertainty and the strengths and weaknesses of a piece of research (Norse, 2005; Aguinis et al., 2010; Tyler, 2013; Game et al., 2015; Sutherland and Burgman, 2015), and be trained to “estimate probabilities of events, quantities or model parameters” (Sutherland and Burgman, 2015). Be ‘policy-relevant’ (NCCPE, 2018; Maddox, 1996; Green et al., 2009; Farmer, 2010; Kerr et al., 2015; Colglazier, 2016; Tesar et al., 2016; Echt, 2017b; Fleming and Pyenson, 2017; Olander et al., 2017; POST, 2017), although this is rarely defined. Two exceptions include the advice for research programmes to be embedded within national and regional governmental programmes (Walley et al., 2018) and for researchers to provide policymakers with models estimating the harms and benefits of different policy options (Basbøll, 2018; Topp et al., 2018).

Academics should engage in more effective dissemination (NCCPE, 2018; Maddox, 1996; Green et al., 2009; Farmer, 2010; Kerr et al., 2015; Colglazier, 2016; Tesar et al., 2016; Echt, 2017b; Fleming and Pyenson, 2017; Olander et al., 2017; POST, 2017), make data public (Malakoff, 2017), and provide clear summaries and syntheses of problems and solutions (Maybin, 2016). Use a range of outputs (social media, blogs, policy briefs) to make sure that policy actors can contact you with follow-up questions (POST, 2017; Parry-Davies and Newell, 2014), and write for generalist, but not ignorant, readers (Hillman, 2016). Avoid jargon but don’t over-simplify (Farmer, 2010; Goodwin, 2013); make simple and definitive statements (Brumley, 2014), and communicate complexity (Fischoff, 2015; Marshall and Cvitanovic, 2017; Whitty, 2015).

Some blogs advise academics to use established storytelling techniques to persuade policymakers of a course of action or better communicate scientific ideas. Produce good stories based on emotional appeals or humour to expand and engage your audience (Evans, 2013; Fischoff, 2015; Docquier, 2017; Petes and Meyer, 2018). Jones and Crow develop a point-by-point guide to creating a narrative through scene-setting, casting characters, establishing a plot, and equating the moral with a ‘solution to the policy problem’ (Jones and Crow, 2017; Jones and Crow, 2018).

Academics are advised to get to know how policy works, and in particular to accept that the normative technocratic ideal of ‘evidence-based’ policymaking does not reflect the political nature of decision-making (Tyler, 2013; Echt, 2017a). Policy decisions are ultimately taken by politicians on behalf of constituents, and technological proposals are only ever going to be part of a solution (Eisenstein, 2017). Some feel that science should hold a privileged position in policy (Gluckman, 2014; Reed and Evely, 2016) but many recognise that research is unlikely to translate directly into an off-the-shelf ready-to-wear policy proposal (Tyler, 2013; Gluckman, 2014; Prehn, 2018), and that policy rarely changes overnight (Marshall and Cvitanovic, 2017). Being pragmatic and managing one’s expectations about the likely impact of research on policy—which bears little resemblance to the ‘policy cycle’—is advised (Sutherland and Burgman, 2015; Tyler, 2013).

Second, learn the basics, such as the difference between the role of government and parliament, and between other types of policymakers (Tyler, 2013). Note that your policy audience is likely to change on a yearly basis if not more frequently (Hillman, 2016), and that policymakers have busy and constrained lives, and their own career concerns and pathways (Lloyd, 2016; Docquier, 2017; Prehn, 2018). Do not guess what might work; take the time to listen and learn from policy colleagues (Datta, 2018).

Third, learn to recognise broader policymaking dynamics, paying particular attention to changing policy priorities (Fischoff, 2015; Cairney, 2017). Academics are good at placing their work in the context of the academic literature, but also need to situate it in the “political landscape” (Himmrich, 2016). To do so means taking the time to learn what, when, where and who to influence (NCCPE, 2018; Marshall and Cvitanovic, 2017; Tilley et al., 2017); getting to know audiences (Jones and Crow, 2018); and learning about, and maximising use of, established ways to engage, such as advisory committees and expert panels (Gluckman, 2014; Pain, 2014; Malakoff, 2017; Hayes and Wilson, 2018). Persistence and patience are advised: sticking at it, and changing strategy if it is not working (Graffy, 1999; Tilley et al., 2017).

Prehn uses the phrase ‘professional friends’, which encapsulates vague but popular concepts such as ‘build trust’ and ‘develop good relationships’ (Farmer, 2010; Kerr et al., 2015; Prehn, 2018). Building and maintaining long-term relationships takes effort, time and commitment (Goodwin, 2013; Maybin, 2016), and such relationships can be easily damaged. It can take time to become established as a “trusted voice” (Goodwin, 2013) and may require a commitment to remaining non-partisan (Morgan et al., 2001). Therefore, build routine engagement on authentic relationships, developing a genuine rapport by listening and responding (Goodwin, 2013; Jo Clift Consulting, 2016; Petes and Meyer, 2018). Some suggest developing leadership and communication skills, but with reference to listening and learning (Petes and Meyer, 2018; Topp et al., 2018). Others suggest adopting a respectful, helpful, and humble demeanour, recognising that while academics are authorities on the evidence, we may not be the appropriate people to describe or design policy options (Nichols, 1972; Knottnerus and Tugwell, 2017), although many disagree (Morgan et al., 2001; Morandi, 2009). Behave courteously by acting professionally (asking for feedback; responding promptly; following up meetings and conversations swiftly) (NCCPE, 2018; Goodwin, 2013; Jo Clift Consulting, 2016). Several commentators also reference the idea of ‘two cultures’ of policy and research (Shergold, 2011), which have their own language, practices and values (Goodwin, 2013). Learning to speak this language would enable researchers to better understand all that is said and unsaid in interactions (Jo Clift Consulting, 2016).

Reflecting on accessibility should prompt researchers to consider how to draw the line between providing information and making recommendations. One possibility is for researchers to simply disseminate their research honestly, clearly, and in a timely fashion, acting as an ‘honest broker’ of the evidence base (Pielke, 2007). In this mode, other actors may pick up and use evidence to influence policy in a number of ways, such as shaping the debate, framing issues, problematizing the construction of solutions and issues, and explaining the options (Nichols, 1972; Knottnerus and Tugwell, 2017), while researchers seek to remain ‘neutral’. Another option is to recommend specific policy options or describe the implications for policy based on their research (Morgan et al., 2001; Morandi, 2009), perhaps by storytelling to indicate a preferred course of action (Evans, 2013; Fischoff, 2015; Docquier, 2017; Petes and Meyer, 2018). However, the boundary between these two options is very difficult to negotiate or identify in practice, particularly since policymakers often value candid judgements and opinions from people they trust, rather than new research (Maybin, 2016).

Getting to know policymakers better and building longer-term networks (Chapman et al., 2015; Evans and Cvitanovic, 2018) could give researchers better access to opportunities to shape policy agendas (Colglazier, 2016; Lucey et al., 2017; Tilley et al., 2017), give them more credibility within the policy arena (Prehn, 2018), help them to identify the correct policy actors or champions to work with (Echt, 2017a), and provide better insight into policy problems (Chapman et al., 2015; Colglazier, 2016; Lucey et al., 2017; Tilley et al., 2017). Working with policymakers as early as possible in the process helps develop shared interpretations of the policy problem (Echt, 2017b; Tyler, 2017) and agreement on the purpose of research (Shergold, 2011). Co-designing, or otherwise doing research-for-policy together, is widely held to be morally, ethically, and practically one of the best ways to achieve the elusive goal of getting evidence into policy (Sebba, 2011; Green, 2016; Eisenstein, 2017). Engaging publics more generally is also promoted (Chapman et al., 2015). Relationship-building activities require major investment and skills, and often go unrecognised (Prehn, 2018), but may offer the most likely route to get evidence into policy (Sebba, 2011; Green, 2016; Eisenstein, 2017). Initially, researchers can use blogs and social media (Brumley, 2014; POST, 2017) to increase their visibility to the policy community, combined with networking and direct approaches to policy actors (Tyler, 2013).

One of the few pieces built on a case study of impact argued that academics should build coalitions of allies, but also engage political opponents, and learn how to fight for their ideas (Coffait, 2017). However, collaboration can also lead to conflict and reputational damage (De Kerckhove et al., 2015). Therefore, when possible, academics should produce ground rules acceptable to academics and policymakers. They should be honest and thoughtful about how, when, and why to engage; and recognise the labour and resources required for successful engagement (Boaz et al., 2018). Successful engagement may require all parties to agree about processes, including ethics, consent, and confidentiality, and outputs, including data and intellectual property (De Kerckhove et al., 2015; Game et al., 2015; Hutchings and Stenseth, 2016). The organic development of these networks and contacts takes time and effort, and they should be recognised as assets, particularly when offered new contacts by colleagues (Evans and Cvitanovic, 2018; Boaz et al., 2018).

Much of the ‘how to’ advice projects an image of a daring, persuasive scientist, comfortable in policy environments and always available when needed (Datta, 2018), by using mentors to build networks, or through ‘cold calling’ (Evans and Cvitanovic, 2018). Some ideas and values need to be fought for if they are to achieve dominance (Coffait, 2017; Docquier, 2017), and multiple strategies may be required, from leveraging trust in academics to advocating more generally for evidence-based policy (Garrett, 2018). Academics are advised to develop “media-savvy” skills (Sebba, 2011), learn how to “sell the sizzle” (Farmer, 2010), and become able to “convince people who think differently that shared action is possible” (Fischoff, 2015), but also be pragmatic, by identifying real, tangible impacts and delivering them (Reed and Evely, 2016). Such a range of requirements may imply that being constantly available, and becoming part of the scenery, makes it more likely for a researcher to be the person to hand in an hour of need (Goodwin, 2013). Or, it could prompt a researcher to recognise their relative inability to be persuasive, and to hire a ‘knowledge broker’ to act on their behalf (Marshall and Cvitanovic, 2017; Quarmby, 2018).

Academics may be a good fit in the policy arena if they ‘want to be in real world’, ‘enjoy finding solutions to complex problems’ (Echt, 2017a; Petes and Meyer, 2018), or are driven ‘by a passion greater than simply adding another item to your CV’ (Burgess, 2005). They should be genuinely motivated to take part in policy engagement, seeing it as a valuable exercise in its own right, as opposed to something instrumental to merely improve the stated impact of research (Goodwin, 2013). For example, scientists can “engage more productively in boundary work, which is defined as the ways in which scientists construct, negotiate, and defend the boundary between science and policy” (Rose, 2015). They can converse with policymakers about how science and scientific careers are affected by science policy, as a means of promoting more useful support within government (Pain, 2014). Or, they can use teaching to get students involved at an early stage in their careers, to train a new generation of impact-ready entrepreneurs (Hayes and Wilson, 2018). Such a profound requirement of one’s time should prompt constant reflection and refinement of practice. It is hard to know what our impact may be or how to sustain it (Reed and Evely, 2016). Therefore, academics who wish to engage must learn and reflect on the consequences of their actions (Datta, 2018; Topp et al., 2018).

Our observation is that this advice is rather vague and very broad, and that each theme contains a diversity of opinions. We also argue that much of this advice is based on misunderstandings about policy processes, and the roles of researchers and policymakers. We summarise these misunderstandings below (see Table 2 for an overview), drawing on a wider range of sources such as policy studies literature (Cairney, 2016) and a systematic review of factors influencing evidence use in policy (Oliver et al., 2014), to identify the wider context in which to understand and use these tips. We also contextualise these discussions in the broader evidence and policy/practice literature.

Table 2 Similarities and differences in the ‘how to’, empirical, and policy studies literature

Firstly, there is no consensus over what counts as good evidence for policy (Oliver and de Vocht, 2015), and therefore how best to communicate good evidence. While we can probably agree what constitutes high quality research within each field, the criteria we use to assess it in many disciplines (such as generalisability and methodological rigour) have far lower salience for policymakers (Hammersley, 2013; Locock and Boaz, 2004). They do not adhere to the scientific idea of a ‘knowledge deficit’ in which our main collective aim is to reduce policymaker uncertainty by producing more of the best scientific evidence (Crow and Jones, 2018). Rather, evidence garners credibility, legitimacy and usefulness through its connections to individuals, networks and topical issues (Cash et al., 2003; Boaz et al., 2015; Oliver and Faul, 2018).

One way in which to understand the practical outcome of this distinction is to consider the profound consequences arising from the ways in which policymakers address their ‘bounded rationality’ (Simon, 1976; Cairney and Kwiatkowski, 2017). Individuals seek cognitive shortcuts to avoid decision-making ‘paralysis’—when faced with an overwhelming amount of possibly-relevant information—and allow them to process information efficiently enough to make choices (Gigerenzer and Selten, 2001). They combine ‘rational’ shortcuts, including trust in expertise and scientific sources, and ‘irrational’ shortcuts, to use their beliefs, emotions, habits, and familiarity with issues to identify policy problems and solutions (see Haidt, 2001; Kahneman, 2011; Lewis, 2013; Baumgartner, 2017; Jones and Thomas, 2017; Sloman and Fernbach, 2017). Therefore, we need to understand how they use such shortcuts to interpret their world, pay attention to issues, define issues as policy problems, and become more or less receptive to proposed solutions. In this scenario, effective policy actors—including advocates of research evidence—frame evidence to address the many ways to interpret policy problems (Cairney, 2016; Wellstead et al. 2018) and compete to draw attention to one ‘image’ of a problem and one feasible solution at the expense of the competition (Kingdon and Thurber, 1984; Majone, 1989; Baumgartner and Jones, 1993; Zahariadis, 2007). This debate determines the demand for evidence.

Secondly, there is little empirical guidance on how to gain the wide range of skills that researchers and policymakers need, to act collectively to address policymaking complexity, including to: produce evidence syntheses, manage expert communities, ‘co-produce’ research and policy with a wide range of stakeholders, and be prepared to offer policy recommendations as well as scientific advice (Topp et al., 2018). The list of skills includes the need to understand the policy processes in which you engage, such as by understanding the constituent parts of policymaking environments (John, 2003, p. 488; Cairney and Heikkila, 2014, pp. 364–366) and their implications for the use of evidence:

A one-size-fits-all model is unlikely to help researchers navigate this environment where different audiences and institutions have different cultures, preferences and networks. Gaining knowledge of the complex policy context can be extremely challenging, yet the implications are profoundly important. In that context, theory-informed studies recommend investing your time over the long term, to build up alliances, trust in the messenger, and knowledge of the system, and to exploit ‘windows of opportunity’ for policy change (Cairney, 2016, p. 124). However, they also suggest that this investment of time may pay off only after years or decades, or not at all (Cairney and Oliver, 2018).

This context could have a profound impact on the way in which we interpret the eight tips. For example, it may:

Throughout this process, we need to decide what policy engagement is for—whether it is to frame problems or simply measure them according to an existing frame—and how far researchers should go to be useful and influential. While immersing oneself fully in policy processes may be the best way to achieve credibility and impact for researchers, there are significant consequences of becoming a political actor (Jasanoff and Polsby, 1991; Pielke, 2007; Haynes et al., 2011; Douglas, 2015). The most common consequences include criticism within one’s peer-group (Hutchings and Stenseth, 2016), being seen as an academic ‘lightweight’ (Maynard, 2015), and being used to add legitimacy to a policy position (Himmrich, 2016; Reed and Evely, 2016; Crouzat et al., 2018). More serious consequences include a loss of status completely—David Nutt famously lost his advisory role after publicly criticising UK government drug policy—and the loss of one’s safety if adopting an activist mindset (Zevallos, 2017). If academics need to go ‘all in’ to secure meaningful impact, we need to reflect on the extent to which they have the resources and support to do so.

These misunderstandings matter, because well-meaning people are giving recommendations that are not based on empirical evidence, and may lead to significant risks, such as reputational damage and wasted resources. Further, their audience may reinforce this problem by holding onto deficit models of science and policy, and equating policy impact with a simple linear policy cycle. When unsuccessful, despite taking the ‘how to’ advice to heart, researchers may blame politics and policymakers rather than reflecting on their own role in a process they do not understand fully.

Although it is possible to synthesise the ‘how to’ advice into eight main themes, many categories contain a wide range of beliefs or recommendations within a very broad description of qualities like ‘accessibility’ and ‘engagement’. We interrogate key examples to identify the wide range of (potentially contradictory) advice about the actual and desirable role of researchers in politics: whether to engage, how to engage, and the purpose of engagement.

A key area of disagreement was over the normative question of whether academics should advocate for policy positions, try to persuade policymakers of particular courses of action (e.g., Tilley et al., 2017), offer policy implications from their research (Goodwin, 2013), or be careful not to promote particular methods and policy approaches (Gluckman, 2014; Hutchings and Stenseth, 2016; Prehn, 2018). Aspects of the debate include:

Such debates imply a choice to engage and do not routinely consider the unequal effects built on imbalances of power (Cairney and Oliver, 2018). Many researchers are required to show impact, so engagement is not strictly a choice. Further, there are significant career costs to engagement, which are relatively difficult for more junior or untenured researchers to bear, while women and people of colour may be more subject to personal abuse or exploitation. The risk of burnout, or the opportunity cost of doing impact rather than conducting the main activities of teaching and research jobs, is too high for many (Graffy, 1999; Fischoff, 2015). Being constantly available, engaging with no clear guarantee of impact or success, with no payment for time or even travel, is not possible for many researchers, even if that is the most likely way to achieve impact. This means that the diversity of voices available to policy is limited (Oliver and Faul, 2018). Much of the ‘how to’ advice is tailored to individuals without taking into account these systemic issues. It is mostly drawn from the experiences of people who consider themselves successful at influencing policy. The advice is likely to be useful mostly to a relatively similar group of people who are confident, comfortable in policy environments, and have both access and credibility within policy spaces. Thus, the current advice and structures may help reproduce and reinforce existing power dynamics and an underrepresentation of women, BAME academics, and people who otherwise do not fit the very narrow mould (Cairney and Oliver, 2018), even extending to the exclusion of academics from certain institutions or circles (Smith and Stewart, 2017).

A second dilemma is: how should academics try to influence policy? By merely stating the facts well, telling stories to influence our audience more, or working with our audience to help produce policy directly? Three main approaches were identified in the reviews. Firstly, to use specific tools such as evidence syntheses, or social media, to improve engagement (Thomson, 2013; Caird et al., 2015). This approach fits with the ‘deficit’ model of the evidence-policy relationship, whereby researchers merely provide content for others to work with. As extensively discussed elsewhere, this method, while safe, has not been shown to be effective at achieving policy change; and underpinning much of the advice in this strand are some serious misunderstandings about the practicalities, psychology and real-world nature of policy change and information flow (Sturgis and Allum, 2004; Fernández, 2016; Simis et al., 2016).

Secondly, to use emotional appeals and storytelling to craft attractive narratives with the explicit aim of shaping policy options (Jones and Crow, 2017; Crow and Jones, 2018). Leaving aside the normative question of the independence of scientific research, or researchers’ responsibilities to represent data fully and honestly (Pielke, 2007), this strategy makes practical demands on the researcher. It requires having the personal charisma to engage diverse audiences and seem persuasive yet even-handed. Some of the advice suggests that academics try to seem pragmatic and equable about the outcome of any such approach, although it is not always clear whether this is to help the researcher seem more worldly-wise and sensible, or simply a self-protective mechanism (King, 2016). Either way, deciding how to seem omnipotent yet credible; humble but authoritative; straightforward yet not over-simplifying (all while still appearing authentic) is probably beyond the scope of most of our acting abilities.

Thirdly, to collaborate (Oliver et al., 2014). Co-production is widely hailed as the most likely way to promote the use of research evidence in policy, as it would enable researchers to respond to policy agendas, and enable more agile multidisciplinary teams to coalesce around topical policy problems. There are also trade-offs to this way of working (Flinders et al., 2016). Researchers have to cede control over the research agenda and interpretations. This can give rise to accusations of bias, partisanship, or at least partiality for one political view over another. There are significant reputational risks involved in collaboration, within the academic community and outside it. There are also practical and logistical concerns about how and when to maintain control of intellectual property and access to data. More broadly, collaboration may cloud one’s judgement about the research in hand, hindering one’s ability to think or speak critically without damaging working relationships.

Authors do not always tell us the purpose of engagement before they tell us how to do it. Some warn against ‘tokenistic’ engagement, and there is plenty of advice for academics wanting to build ‘genuine’ rapport with policymakers to make their research more useful. Yet, it is not always clear if researchers should try to seem authentically interested in policymakers as a means of achieving impact, or actually listen, learn, and cede some control over the research process. The former can be damaging to the profession. As Goodwin points out, it’s not just policymakers who may feel short-changed by transactional relationships: “by treating policy engagement as an inconvenient and time-consuming ‘bolt on’ you may close doors that could be left open for academics who genuinely care about this collaborative process” (Goodwin, 2013). The latter option is more radical. It involves a fundamentally different way of doing public engagement: one with no clear aim in mind other than to listen and learn, with the potential to transform research practices and outputs (Parry-Davies and Newell, 2014).

Although the literature helps us frame such dilemmas, it does not choose for us how to solve them. There are no clear answers on how scientists should act in relation to policymaking or the public (Mazanderani and Latour, 2018), but we can at least identify and clarify the dilemmas we face, and seek ways to navigate them. Therefore, it is imperative to move quickly from basic ‘how to’ advice towards a deeper understanding of the profound choices that shape careers and lives.

Academics are routinely urged to create impact from their research; to change policy, practice, and even population outcomes. There are, however, few empirical evaluations of strategies to enable academics to create impact. This lack of empirical evidence has not prevented people from offering advice based on their personal experience, rather than concrete evaluations of strategies to increase impact. Much of the advice demonstrates a limited understanding or description of policy processes and the wider social aspects of ‘doing’ science and research. The interactions between knowledge production and use may be so complex that abstract ‘how to’ advice is limited in use. The ‘how to’ advice has a potentially immense range, from very practical issues (how long should an executive summary be?) to very profound (should I risk my safety to secure policy change?), but few authors situate themselves in that wider context in which they provide advice.

There are some more thoughtful approaches which recognise more complex aspects of the task of influencing policy: the emotional, practical and cognitive labour of engaging; that it often goes unrewarded by employers; that impact is never certain, so engagement may remain unrewarded; and that our current advice, structures and incentives have important implications for how we think about the roles and responsibilities of scientists when engaging with publics. Some of the ‘how to’ literature also considers the wider context of research production and use, noting that the risks and responsibilities are borne by individuals and that, for example, one individual cannot possibly get to know the whole policy machinery or predict the consequences of their engagement on policy or themselves. For example, universities, funders and academics are advised to develop incentives and structures to make ‘impact’ happen more easily (Kerr et al., 2015; Colglazier, 2016), and to remove any actual or perceived penalisation of ‘doing’ public engagement (Maynard, 2015). Some suggest universities should move into the knowledge brokerage space, acting more like think-tanks (Shergold, 2011) by creating and championing policy-relevant evidence (Tyler, 2017), and providing “embedded gateways” which offer access to credible and high-quality research (Green, 2016). Similarly, governments have their own science advisory systems which, they are advised, should be independent, inclusive and accountable (Morgan et al., 2001; Malakoff, 2017). Government and Parliament need to be mindful about the diversity of the experts and voices on which they draw. For example, historians and ethicists could help policymakers question their assumptions and explore historical patterns of policies and policy narratives in particular areas (Evans, 2013; Haddon et al., 2015), but economics and law have more currency with policymakers (Tyler, 2013).

However, we were often struck by the limited range of advice offered to academics, many of whom are at the beginning of their careers. This gap may leave each generation of scientists to fight the same battles, and learn the same lessons over again. In the absence of evidence about the effectiveness of these approaches, all one can do is suggest a cautious, learning approach to coproduction and engagement, while recognising that there is unlikely to be a one-size-fits-all model which would lead to simple, actionable advice. Further, we do not detect a coherent vision for wider academy-policymaker relations. Since the impact agenda (in the UK, at least) is unlikely to recede any time soon, our best response as a profession is to interrogate it, shape and frame it, and help us all to find ways to navigate the complex practical, political, moral and ethical challenges associated with being researchers today. The ‘how to’ literature can help, but only if authors are cognisant of their wider role in society and complex policymaking systems.

For some commentators, engagement is a safe choice tacked onto academic work. Yet, for many others, it is a more profound choice to engage for policy change while accepting that the punishments (such as personal threats or abuse) versus rewards (such as impact and career development opportunities) are shared highly unevenly across socioeconomic groups. Policy engagement is a career choice in which we seek opportunities for impact that may never arise, not an event in which an intense period of engagement produces results proportionate to effort.

Overall, we argue that the existing advice offered to academics on how to create impact is not based on empirical evidence, or on good understandings of key literatures on policymaking or evidence use. This leads to significant misunderstandings, and advice which can have potentially costly repercussions for research, researchers and policy. These limitations matter, as they lead to advice which fails to address core dilemmas for academics (whether to engage, how to engage, and why), which have profound implications for how scientists and universities should respond to the call for increased impact. Most of these tips focus on individuals, whereas engagement between research and policy is driven by systemic factors.

The datasets generated during and/or analysed during the current study are not publicly available but are available from the corresponding author on reasonable request.