This study concerns the development and validation of a questionnaire to measure primary school Teachers’ Attitudes towards New Technology use in teaching (TANT). Many researchers, policy makers and educators have emphasized the importance of using new technology in teaching. However, no instrument is available to measure teachers’ attitudes towards using new technology in teaching. In a previous literature study (authors), we used the well-known Theory of Planned Behaviour to identify and structure eight underlying factors that make up primary school teachers’ attitudes towards using technology in teaching. In the current study we aim to measure these factors. To that end, we developed eight corresponding scales, as well as a scale to measure primary school teachers’ use of new technology. Results of the validation study among 659 pre- and in-service teachers showed adequate convergent and discriminant validity for six attitudinal factors and teachers’ use of new technology. In addition, we explored the predictive validity of the attitudinal factors for explaining variability in teachers’ use of new technology and established configural, metric and scalar measurement invariance.


The importance of teaching young learners to work with new technology to prepare them for their (working) lives in a technology-dominated society has been emphasized by many researchers, educators and policy makers (International Society for Technology in Education 2008; Panossian 2016). Consequently, primary school teachers are expected to use new technology in their teaching. However, meaningful integration of technology by teachers is generally slow (Tondeur et al. 2017). Many teachers use technology to provide classroom instruction (e.g., the interactive whiteboard) and to let students practice routines (e.g., drill-and-practice software) instead of using technology to stimulate children’s own analysis, knowledge construction, or problem solving (Ertmer et al. 2015; Smeets 2020).

Previous work has shown that teachers’ attitudes towards technology use may affect the integration of technology in educational practice (e.g. Ottenbreit-Leftwich et al. 2018; Scherer et al. 2018). Although many self-report instruments exist that aim to measure teachers’ attitudes towards using technology in their teaching, to our knowledge, a valid and reliable instrument measuring teachers’ attitudes towards using new technology does not yet exist. In addition, current instruments measuring teachers’ attitudes towards technology use face several problems. First, a definition of the construct of attitude is lacking or incomplete (e.g. Steiner and Mendelovitch 2017), the subcomponents of attitude that are measured are not explained (e.g. Sami Konca et al. 2016), or no distinction is made between attitudes and related concepts such as interest or motivation (e.g. Meishar-Tal and Ronen 2016). This relates to a second issue: for many instruments, the psychometric quality is unclear or has not been established using statistical validation procedures (e.g. Pittman and Gaines 2015; Domingo and Garganté 2016). This is especially problematic because, unless the reliability and validity of an instrument have been determined, it is impossible to judge the value of its results. Third, the object of the attitude is not always clearly defined. For example, researchers have sometimes measured teachers’ attitudes towards technology use in general (e.g. Christensen and Knezek 2009; Teo et al. 2017), rather than their attitudes towards using technology in teaching.

Some of these problems may be caused by the lack of a strong theoretical basis underlying the development of these instruments. To address this, we used the well-known Theory of Planned Behaviour (TPB) developed by Ajzen (1991, 2001) to identify and structure the underlying factors that make up primary school teachers’ attitudes towards using technology in teaching (Authors). It seems reasonable to assume that some of these factors also influence teachers’ attitudes towards using new technology in their teaching. We therefore use this theoretical basis to develop an instrument that can be used to measure teachers’ attitudes towards using new technology in teaching (the TANT questionnaire). New technology is a difficult concept to define and specify: what is new in one context or for one person might be outdated in another context or for another person. In Section 2.2, we explain how we define new technology in this study.

The TANT questionnaire can be used to help us understand why some teachers implement new technology in their teaching, while other teachers might not. Some teachers might think that new technology does not add much to students’ learning, which might be a well-substantiated professional opinion, whereas others might not feel capable enough to use new technology, even if they think it might benefit students’ learning. Such insights might provide starting points for tailored teacher training programs to support teachers in teaching with new technology. A valid and reliable instrument can be used to evaluate the effects of such training programs on teachers’ attitudes towards using new technology.

Furthermore, because we still know little about the impact of attitude on teachers’ actual use of technology, we added a scale to measure primary school teachers’ use of new technology, so that we could explore the relationships between the attitudinal factors and teachers’ actual use of new technology. In a meta-analytic review, Scherer et al. (2020) found that several attitudinal subcomponents influence teachers’ intention to use technology. However, there was insufficient evidence to conclude that this intention results in actual use of technology. Using a valid and reliable instrument to measure teachers’ attitudes towards new technology, combined with measures of their actual use of new technology, might help fill this gap in the research. Based on the considerations described above, we aim to develop an instrument to measure primary school teachers’ attitudes towards using new technology in teaching and their actual use of new technology, and to evaluate its validity and reliability.

According to Ajzen (2001), “attitude represents a summary evaluation of a psychological object (the ‘attitude-object’), captured in such attribute dimensions as good-bad, harmful-beneficial, pleasant-unpleasant, and likeable-dislikeable” (p. 28). An attitude-object is the entity about which an attitudinal evaluation is made (Ajzen 1991, 2001) and is usually a specific behaviour. In this study, the attitude-object is the use of new technology in teaching.

The TPB describes attitude based on three dimensions: (1) perceptions of behavioural attributes, which refer to the (cognitive) beliefs and (affective) feelings someone associates with the specific behaviour; (2) perceptions of social norms, which refer to the perceived social acceptability of the behaviour; and (3) perceptions of behavioural control, which refer to the level of control someone perceives themselves to have in performing the behaviour. This perceived control can refer to external factors, such as the availability of time and resources, or to internal factors, such as the perceived capability of enacting the specific behaviour (often defined as ‘self-efficacy’, based on Bandura’s concept). These dimensions comprise factors that are specific to each attitude-object. The evaluation of each of these factors may influence, to varying degrees, the overall attitude towards the attitude-object (Ajzen 1991).

The term new technology is problematic since something that is new for one person may not necessarily be new for another person. In this study we took the teachers’ context and perspectives as a starting point for what could be considered new. ‘New’ depends on what technology is available to teachers (context), how they perceive that technology can enhance their teaching practice to support student learning, and whether they are aware of the potential of different technologies. Dede (2000) already reflected on the capabilities of technology to support teaching and learning, such as mirroring authentic problems or high-tech workplaces (e.g., games, augmented and virtual reality); enhancing students’ collaborative skills in online communities of practice (e.g., social media, knowledge forums); modelling and visualizing complex concepts (e.g., simulations, virtual reality); and facilitating the development of higher-order skills through guided inquiry learning (e.g., programming software, simulations, robots). However, most teachers make limited use of these affordances of technology for teaching and learning (Ottenbreit-Leftwich et al. 2018). Studies in the Netherlands (Voogt et al. 2016; Smeets 2020) showed that teachers mostly used the interactive whiteboard and hardly used other technologies (e.g., robots, virtual reality) to enhance their teaching practices. International research confirms the limited use of new technology by teachers (Fraillon et al. 2018; Backfish et al. 2021). The results of these studies might indicate that many teachers are not aware of the potential of various technologies to enhance their teaching or are unsure about how such technologies can be used to enhance student learning. This made it hard to define ‘new technology’ for this study. We decided to focus on the use of technology to enhance teachers’ teaching practices and to provide examples of technologies that are currently hardly used by (Dutch) teachers.
This resulted in the following explanation of new technology, which we provided to the teachers who completed the TANT questionnaire: “New technology refers to hardware and software that teachers can use to support and/or enrich their teaching practices. Some examples of hardware are: smartphones, tablets, 3D printers and educational robots (BeeBot, DASH). Software examples are: simulation software, design software, programming software and video-editing software.”

Based on the considerations described above, we aimed to answer the following research questions in this study:

We used Trochim and Donnelly’s (2006) framework for construct validity to guide the validation of our questionnaire (see Velayutham et al. 2011 for a detailed description of the application of this framework). Trochim and Donnelly state that a construct must fulfil both translation and criterion validity requirements. Translation validity is determined by content validity (whether the construct is theoretically sound and the instrument provides a proper representation of it) and face validity (whether the items used to measure the construct clearly reflect the construct and are interpreted as intended by the participants). Criterion validity is determined by convergent validity (whether the items used to measure the same construct are highly correlated with each other), discriminant validity (whether items used to measure different constructs are not highly correlated with each other), predictive validity (whether the construct is able to predict something it should theoretically be able to predict) and concurrent validity (whether the construct can be used to distinguish between groups that it should theoretically be able to distinguish). This means that an instrument has high construct validity if its content, face, convergent, discriminant, predictive and concurrent validity can be established.

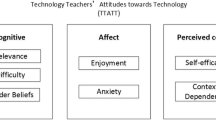

As described in the introduction, we used the TPB to develop a theoretical framework that describes factors that make up primary school teachers’ attitudes towards using technology in teaching (Authors). These factors are perceived usefulness, perceived ease of use, perceived relevance, anxiety, enjoyment, self-efficacy, context dependency and subjective norms. We aim to measure these eight factors with the TANT questionnaire. In addition, we added a scale to measure teachers’ actual use of new technology in teaching.

When designing the questionnaire, several previously developed questionnaires that aimed to measure (some of) the subcomponents identified in our theoretical framework were critically reviewed in order to determine whether the items used in those questionnaires would suit our purposes (Christensen and Knezek 2009, 2017; Van Aalderen-Smeets and Walma van der Molen 2013; Melocchi 2014; Heitink et al. 2016; Teo et al. 2016; Admiraal et al. 2017; Farjon et al. 2019).

Based on an exploration of those questionnaires, we selected items that we regarded as suitable to use as a basis for designing our items. When designing questionnaire items, several criteria and considerations are important, such as item wording, sequence and format (Schwarz 2008). Based on these criteria, most of the items that we had selected were adjusted to some extent.

Perceived usefulness (PU) refers to teachers’ beliefs about the usefulness of new technology for improving and/or enriching their teaching and the learning of their students. We selected four items used by Melocchi (2014) for measuring the component ‘perceived usefulness’ (Cronbach’s α = 0.97). These items were based on the Unified Theory of Acceptance and Use of Technology (UTAUT) instrument (Venkatesh et al. 2003) and are similar to items used by Teo et al. (2016). We revised the original items to better fit the context of our study (using new technology in teaching), to make the items more personal (I think that…) and to address more specific teaching activities. For example, an item used by Melocchi (2014), ‘Utilizing iPad technology in my classes improves my job performance’, was changed to: ‘I think that my students’ learning results will improve when I use new technology in my lessons’.
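Because the scale reliabilities quoted in this section are reported as Cronbach’s α, a minimal sketch of the standard formula may help readers interpret them. It assumes complete data (no missing responses) and uses a hypothetical function name, `cronbach_alpha`; it is illustrative only and is not the software used in the cited studies.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for k items answered by the same respondents.

    items: list of k lists; items[j][i] is respondent i's score on item j.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores).
    Population variances are used throughout; the ratio is identical with
    sample variances because the same denominator cancels.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(col) != n for col in items):
        raise ValueError("all items need the same number (>= 2) of respondents")
    # Total (sum) score per respondent across all k items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    item_variance_sum = sum(pvariance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / pvariance(totals))


# Hypothetical responses of five respondents to a three-item, 5-point scale.
example = [[4, 5, 3, 4, 2], [4, 4, 3, 5, 2], [5, 5, 2, 4, 1]]
print(round(cronbach_alpha(example), 3))
```

Alpha rises when items covary strongly relative to their individual variances, which is why highly similar items (such as the PU items above) can yield values close to 1.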

Perceived ease of use (PEU) refers to teachers’ beliefs about the ease or difficulty of using new technology in their teaching. We selected three items used by Teo et al. (2016) to measure ‘perceived ease of use’ (composite reliability = 0.98). We adjusted these items to fit our context and to make the items more strongly worded. For example, the item ‘My interaction with technology does not require much effort’ was changed to ‘I think that the use of new technology in my lessons requires very little effort.’

Perceived relevance (PR) refers to teachers’ beliefs about the importance of using new technology in their teaching to prepare learners for later life. We selected the three items with the highest factor loadings (>0.80) from the scale measuring ‘significance’ (Cronbach’s α = 0.87) in the Teachers’ Attitudes towards Computers (TAC) questionnaire (version 6) used by Christensen and Knezek (2009) to measure PR. We adjusted these items to make them more personal and to better fit the context of our study. Furthermore, we expected that most teachers would find the use of new technology at least somewhat relevant. To prevent a ceiling effect, we made the items more strongly worded. A resulting example item is: ‘I think it is crucial that students understand the role of new technology in society’.

Feelings of anxiety (AX) or fear are known to be important barriers for teachers to use technology in their teaching (Yaghi and Abu-Saba 1998). We selected three items from the scale measuring ‘comfort’ (Cronbach’s α = 0.88) used by Farjon et al. (2019; see also Christensen and Knezek 2009) to measure AX. The only change we made to those items was replacing ‘ICT’ with ‘new technology’.

Positive feelings, such as enjoyment (EY), when using technology in teaching may motivate teachers to use technology. We selected four items from the scale measuring ‘interest’ (Cronbach’s α = 0.90) in the TAC questionnaire (version 6) used by Christensen and Knezek (2009) to measure EY. Again, we made some changes to better fit the context of our study and to make the items more personal. For example, an item used by Christensen and Knezek (2009), ‘The challenge of learning about computers is exciting’, was changed to: ‘For me, the use of new technology in my lessons feels like a positive challenge’.

Self-efficacy (SE) refers to teachers’ self-perceived capability to use new technology in their teaching. We selected six items from the Technological, Pedagogical, and Content Knowledge (TPACK)-core questionnaire (Cronbach’s α = 0.91) used by Heitink et al. (2016) to measure SE. We adjusted these items in order to make them more strongly worded. For example, instead of ‘I am able to…’, we used ‘I am quite able to…’. Furthermore, we replaced ‘ICT’ with ‘new technology’.

Context dependency (CD) refers to teachers’ perceptions that external factors, such as the availability of technical resources, on-site support and available time, are a prerequisite for them to be able to use new technology. We used the items measuring ‘context-dependency’ (Cronbach’s α = 0.74) in the context of teaching science and technology (Van Aalderen-Smeets and Walma van der Molen 2013) as a basis for the development of items for measuring CD in this study. We adjusted those items to fit the context of this study and to include external factors that have been described as prerequisites for teachers’ technology use, such as the availability of ready-made assignments (e.g. Vatanartiran and Karadeniz 2015) and technical support (e.g. Khanlari 2016).

Subjective norms (SN) refer to teachers’ perceptions as to whether other people who are important to that teacher think it is good or bad to use new technology in teaching. These important others can be colleagues, the school administration, parents, or students. We selected four items to measure ‘subjective norms’ (Cronbach’s α = 0.89) as used by Admiraal et al. (2017). We adjusted these items to make them more personal (I have the feeling that…) and replaced ‘ICT’ with ‘new technology’.

To determine whether a teacher’s attitude towards using new technology is related to actual technology use, we added a separate section to measure teachers’ self-reported use of new technology in their teaching. This scale contained seven items; responses to these items were given on a 7-point Likert scale: (1) never, (2) a few times a year, (3) once a month, (4) a few times a month, (5) once a week, (6) several times a week, (7) every day (see Table 1).

Table 1 Factor structure for the TANT questionnaire

Although there are several possibilities for measuring attitudes, we chose to develop a Likert-scale instrument, because such instruments have several benefits. First, they are suitable when including items organized in multiple subscales. Second, they can easily be distributed to a large group of participants. Third, the questions can be answered easily. Last, Likert scales allow parametric testing.

For the attitudinal scales, we used a 5-point Likert scale ranging from (1) strongly disagree to (5) strongly agree. There is some criticism of using a Likert scale with an odd number of response options (Johns 2010) because it is difficult to determine how participants interpret the midpoint of such a scale. This midpoint can be interpreted as: do not agree/do not disagree, neutral, no opinion, or do not know. Therefore, some argue for using only an even number of response options, thereby forcing participants to make a choice. However, forcing participants to make a choice may lead to skewed results. To avoid skewed results, we decided to use a 5-point Likert scale in which we labelled only the two extremes (strongly disagree–strongly agree), thereby presenting the response options as a sliding scale.

The first version of the TANT questionnaire, containing 39 items, was presented to five primary school teachers who evaluated every item on clarity and understandability in extended interviews. Furthermore, they indicated to what extent they considered the items appropriate for measuring the underlying constructs and they were asked to indicate whether any additional items were necessary. Based on these evaluations we made several changes. The most important one was reversing the items for the subscale ‘perceived ease of use’. Instead of using items such as: ‘I think it is very easy to use new technology in my lessons’, the items were changed to: ‘I think it is very difficult to use new technology in my lessons’. This was done because some of the teachers indicated that they felt their colleagues might perceive the use of new technology as difficult instead of easy, and that reversing the items would better fit their views. Furthermore, we added two items to the subscale ‘context dependency’. These items represented two other external factors that teachers might perceive as prerequisites for the use of new technology: support from colleagues and that every student has their own device.

The questionnaire (34 attitude items and 7 actual use items) was administered to a large group of pre- and in-service primary school teachers (N = 659) in the Netherlands. This group consisted of 257 in-service primary school teachers and 402 third- and fourth-year pre-service teachers. The effective sample consisted of 136 (20.6%) male and 523 (79.4%) female participants. Participants’ ages ranged from 18 to 65 years (M = 29.52, SD = 12.69).

After approval by the ethics committee of the first author’s university, the questionnaire was administered. One of the researchers visited the primary schools (for in-service teachers) and teacher education colleges (for pre-service teachers). After a brief introduction, and after giving informed consent, teachers were directed to an online (84.2%) or paper-and-pencil (15.8%) version of the questionnaire, which took approximately 15 min to complete. Individual clarification was given if a participant did not understand a specific item.

Approximately 92.5% of the data were collected in this way. In a few cases, having the researcher visit the school was not possible. Therefore, a small number of participants received a link by email redirecting them to the online version of the questionnaire, which they completed on their own.

We followed steps similar to those used by Post and Walma van der Molen (2019) to explore the construct validity and reliability of the TANT questionnaire. First, we looked at the amount of missing data and investigated the range of responses and the standard deviation of participants’ scores for each item. Next, we conducted an exploratory factor analysis (EFA) with half of the data in our sample in MPlus (Muthén and Muthén 1998-2015). This approach helped us identify the latent factors underlying teachers’ attitudes towards using new technology in a data-driven way. As a next step, we used the other half of the sample to perform a confirmatory factor analysis (CFA), again in MPlus, to test the model fit of the factor structure found with EFA.

The two subsamples used for the EFA and CFA (subsample A, n = 328 and subsample B, n = 331) were randomly extracted from the dataset using the split file option in SPSS. Chi-square tests were used to determine whether the subsamples were equivalent regarding the distribution of pre- and in-service teachers and the gender of the participants. We chose to evaluate equivalence for these two variables because Drossel et al. (2017) found that experience with using ICT in teaching and gender affect teachers’ technology use in the Netherlands. Results indicated that pre- and in-service teachers (χ² = 0.426, p = 0.514) and males and females (χ² = 0.505, p = 0.477) were equally distributed across both subsamples.
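The split-half procedure and the equivalence check described above can be sketched in Python. This is a minimal illustration, not the SPSS procedure used in the study: `random_split` and `pearson_chi2_2x2` are hypothetical helpers, the statistic is the uncorrected Pearson chi-square for a 2 × 2 table (df = 1), and the cell counts in the usage example are invented because the subsample compositions are not reported here. The helper produces halves that are as equal as possible, whereas the study’s subsamples contained 328 and 331 participants.

```python
import math
import random


def random_split(ids, seed=None):
    """Shuffle respondent ids and split them into two near-equal halves."""
    rng = random.Random(seed)
    shuffled = list(ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def pearson_chi2_2x2(table):
    """Pearson chi-square (no continuity correction) for a 2 x 2 table.

    table: [[a, b], [c, d]], rows = subsamples, columns = groups.
    Returns (chi2, p). With df = 1, p = erfc(sqrt(chi2 / 2)).
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Hypothetical split of 659 respondent ids.
subsample_a, subsample_b = random_split(range(659), seed=2021)
# Invented cell counts: rows = subsample A/B, columns = pre-/in-service.
chi2, p = pearson_chi2_2x2([[200, 128], [202, 129]])
print(f"chi2 = {chi2:.3f}, p = {p:.3f}")
```

A small χ² with a large p-value, as reported above, indicates no evidence that the group proportions differ between the two subsamples.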

In subsample A, 0.2% of the data were missing, and in subsample B, 0.3%. In the online version of the questionnaire, we used a ‘forced response’ option, which meant that participants had to respond to all items to complete the questionnaire. This was not the case for the paper-and-pencil version, but only a few participants left one or more items unanswered in that version. Since the percentages of missing data in our samples are very low, we did not conduct any additional analyses of the missing data. Both the EFA and CFA were performed on the raw data from the respective subsamples, including the cases with missing values, using the default option for handling missing data in MPlus.

Next, we calculated the standard deviation and range of responses for each item. For a 5-point Likert scale, the standard deviations should hover around 1.0, and each response option should be used at least once (Coulson 1992). Standard deviations ranged from 0.807 to 1.154, and all response options were used at least once for all items with a 5-point Likert scale. For the 7-point scale (self-reported behaviour), standard deviations ranged from 1.032 to 1.418, and again all response options were used at least once. The data were therefore considered appropriate for factor analysis.
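The two screening criteria (a standard deviation of roughly 1.0 for a 5-point item, and every response option used at least once) are simple to automate. The sketch below assumes that responses are coded as integers from 1 to the number of options and that missing values have already been removed; `screen_item` is a hypothetical helper, not part of any package.

```python
from statistics import stdev


def screen_item(responses, n_options):
    """Descriptive screening of one Likert-type item.

    Returns (sample standard deviation, whether every response option
    1..n_options was chosen at least once).
    """
    if len(responses) < 2:
        raise ValueError("need at least two responses")
    observed = set(responses)
    all_options_used = all(option in observed for option in range(1, n_options + 1))
    return stdev(responses), all_options_used


# Hypothetical responses to one 5-point attitude item.
sd, ok = screen_item([1, 2, 2, 3, 3, 3, 4, 4, 5], n_options=5)
print(f"SD = {sd:.3f}, all options used: {ok}")
```

The same function covers the 7-point use items by passing `n_options=7`.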

Using subsample A, iterative exploratory factor analyses were conducted to explore the factor structure of the attitudinal factors. We used maximum likelihood (ML) estimation, and since we expected the subscales to correlate, geomin oblique rotation was used (Field 2009). Items were deleted from further EFAs if their highest factor loading was below 0.35 or if they had a cross-loading within 0.15 of their highest factor loading (Worthington and Whittaker 2006).
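The deletion rule can be expressed as a small function over a matrix of rotated loadings. This sketch assumes the loadings have already been obtained (for example, from the geomin-rotated EFA output) and copied into a dictionary; the function name, item labels and loadings are hypothetical. It covers a single pass only: in an iterative procedure, the EFA would be re-estimated after flagged items are removed. Absolute loadings are compared, so negative loadings are treated like positive ones.

```python
def flag_items(loadings, min_loading=0.35, min_gap=0.15):
    """Return the items that fail the retention rule.

    loadings: dict mapping item label -> list of rotated loadings (one per factor).
    An item is flagged if its highest absolute loading is below min_loading, or if
    its second-highest absolute loading lies within min_gap of the highest one.
    """
    flagged = []
    for item, row in loadings.items():
        ranked = sorted((abs(value) for value in row), reverse=True)
        primary = ranked[0]
        secondary = ranked[1] if len(ranked) > 1 else 0.0
        if primary < min_loading or primary - secondary < min_gap:
            flagged.append(item)
    return flagged


# Hypothetical loadings of three items on two factors.
example_loadings = {
    "item_a": [0.72, 0.08],  # clear primary loading: kept
    "item_b": [0.30, 0.05],  # primary loading below 0.35: flagged
    "item_c": [0.48, 0.41],  # cross-loading within 0.15: flagged
}
print(flag_items(example_loadings))  # ['item_b', 'item_c']
```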

Four items developed to measure context dependency, two items developed to measure perceived usefulness, and three items developed to measure enjoyment were deleted because of low factor loadings or cross-loadings. This left only one item to measure enjoyment. Since a factor cannot be adequately represented by a single item, this item was also deleted, eliminating the enjoyment factor. After the problematic items had been removed, the EFA revealed a six-factor structure, whereas a seven-factor structure (the eight theoretical factors minus enjoyment) had been anticipated.

Inspection of the factor loadings revealed that items developed to measure ‘perceived ease of use’ and ‘anxiety’ loaded together on one factor. On review of the items, this made sense, because the items of both factors were aimed at measuring negative aspects of new technology use. We therefore renamed this factor ‘perceived difficulty’ (PD). This resulted in the identification of the following six factors: perceived relevance (Eigenvalue 3.116), perceived usefulness (Eigenvalue 1.297), perceived difficulty (Eigenvalue 5.958), self-efficacy (Eigenvalue 2.327), context dependency (Eigenvalue 1.107), and subjective norms (Eigenvalue 1.704).

In addition, we conducted an EFA to explore the factor structure of the ‘new technology use’ factor, again using ML estimation and geomin oblique rotation. The results of the EFA showed a one-factor structure (Eigenvalue 3.576) with factor loadings ranging from 0.586 to 0.753. Table 1 presents the observed factors of the TANT questionnaire.

We performed CFA with ML estimation to determine how well the data from subsample B fit the six-factor solution identified with EFA. Several goodness-of-fit indices were used to determine model fit: the standardized root-mean-square residual (SRMR), comparative fit index (CFI), Tucker-Lewis index (TLI), and root-mean-square error of approximation (RMSEA). To indicate good fit, SRMR should be below 0.08 (Prudon 2015), and CFI and TLI should exceed 0.95. Furthermore, RMSEA values should be below 0.07, with lower values indicating better fit (Hooper et al. 2008). Since the χ² test has some shortcomings (see Hooper et al. 2008; Prudon 2015), we report the χ² results only for completeness. It should be noted that the above thresholds are used as guidelines and not as strict rules (Prudon 2015).
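To make the guideline values concrete, the sketch below compares a set of fit indices with the cut-offs listed above and shows the usual point-estimate formula for RMSEA, \(\sqrt{\max(\chi^2 - df, 0) / (df\,(N - 1))}\). It is illustrative only: some programs use N rather than N − 1 in the denominator, so the result may differ slightly from reported software output, and the function names and input values are hypothetical.

```python
import math


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from the model chi-square, its df and sample size n."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n greater than 1")
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def check_fit(srmr, cfi, tli, rmsea_value):
    """Compare fit indices with the guideline values cited in the text.

    These are rules of thumb, not strict cut-offs.
    """
    return {
        "SRMR < 0.08": srmr < 0.08,
        "CFI > 0.95": cfi > 0.95,
        "TLI > 0.95": tli > 0.95,
        "RMSEA < 0.07": rmsea_value < 0.07,
    }


# Hypothetical model: chi2 = 420 on 237 df, N = 331.
r = rmsea(420.0, 237, 331)
print(round(r, 3), check_fit(srmr=0.05, cfi=0.96, tli=0.955, rmsea_value=r))
```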

In addition, we calculated the average variance extracted (AVE), average shared variance (ASV), maximum shared variance (MSV) and composite reliability (CR) for each subscale as obtained by CFA to further estimate discriminant and convergent validity (Raykov 1997; Carter 2016). Discriminant validity of a subscale is considered satisfactory when the AVE is greater than or equal to 0.50 and also greater than the ASV and MSV of the factor. For convergent validity, the CR value should be equal to or greater than 0.70 and greater than the AVE of the subscale (Fornell and Larcker 1981).
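These four quantities can be computed from the standardized CFA loadings of a factor and its correlations with the other factors. The sketch below uses the standard formulas (AVE as the mean squared loading; CR as the squared sum of loadings divided by that quantity plus the summed error variances, taking error variance as 1 − λ² for standardized loadings; MSV and ASV as the maximum and mean squared inter-factor correlation) and applies the criteria exactly as stated in this section. The function names and example values are hypothetical; this is not the computation behind Table 2.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)  # standardized error variances
    return s ** 2 / (s ** 2 + error)


def shared_variance(correlations_with_others):
    """Return (MSV, ASV): max and mean squared correlation with the other factors."""
    squared = [r ** 2 for r in correlations_with_others]
    return max(squared), sum(squared) / len(squared)


def check_factor(loadings, correlations_with_others):
    """Apply the AVE/CR/MSV/ASV criteria described in the text to one factor."""
    a = ave(loadings)
    cr = composite_reliability(loadings)
    msv, asv = shared_variance(correlations_with_others)
    return {
        "AVE": a, "CR": cr, "MSV": msv, "ASV": asv,
        "discriminant": a >= 0.50 and a > msv and a > asv,
        "convergent": cr >= 0.70 and cr > a,
    }


# Hypothetical factor: three loadings of 0.8, correlating 0.5 and 0.3 with two others.
print(check_factor([0.8, 0.8, 0.8], [0.5, 0.3]))
```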

Furthermore, the baseline model that is used for calculating incremental fit indices has the null hypothesis that all variables are uncorrelated (Hooper et al. 2008). Inspection of the correlation tables showed that the variables in the TANT questionnaire are correlated, ranging from r = 0.238 to r = 0.702. Therefore, we used the absolute fit indices (RMSEA, SRMR and χ²) as the most important indicators for determining model fit.

The factor PU is represented by two items. Although it is generally agreed that a factor should be represented by three or more items, two items are considered acceptable if they are reasonably strongly correlated with each other; for two-item scales, Eisinga et al. (2013) recommend the Spearman-Brown coefficient as the reliability estimate. The two items measuring PU were reasonably inter-correlated (Spearman-Brown \(\rho\) = 0.70). Therefore, the factor PU was retained.
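For a two-item scale, the Spearman-Brown coefficient recommended by Eisinga et al. (2013) can be obtained from the Pearson correlation r between the two items as 2r / (1 + r); the general prophecy formula for k parallel items is kr / (1 + (k − 1)r). A minimal sketch, with a hypothetical function name and example correlation:

```python
def spearman_brown(r, k=2):
    """Spearman-Brown reliability of a k-item scale with (mean) inter-item correlation r.

    For the two-item case (k = 2) this reduces to 2r / (1 + r).
    """
    if not -1.0 < r <= 1.0:
        raise ValueError("r must be a correlation greater than -1")
    return k * r / (1 + (k - 1) * r)


# Hypothetical inter-item correlation of 0.54 for a two-item scale.
print(round(spearman_brown(0.54), 3))
```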

Composite reliability scores for all factors were above the threshold of 0.70 and exceeded the AVE scores for each respective subscale, indicating good internal consistency of the scales. Furthermore, AVE scores exceeded the ASV and MSV scores for each respective subscale, indicating good discriminant power of the scales (Table 2).

Table 2 Factor correlations, CR, AVE, MSV, and ASV for the TANT questionnaire subscales (N = 332)

In addition, we calculated the correlations between the attitudinal factors, in order to gain insight into the relationships between these factors. The correlation matrix (Table 2) shows that PR was strongly positively correlated with PU. Although we could not find supporting research, this makes sense, because one can imagine that a teacher who is enthusiastic about the usefulness of new technology for teaching also wishes to teach their pupils how to use new technology so that they can benefit from it in later life.

Furthermore, PD had a moderately negative correlation with SE. This also makes sense, because perceptions of the difficulty of using the technology are likely to be related to self-efficacy for using new technology. Next, PD was moderately positively correlated with CD. It makes sense that perceptions about the difficulty of using technology in teaching may be related to the degree of support that teachers feel they need. Lastly, SE was moderately negatively correlated with CD. Again, one can imagine that a teacher with high SE in the use of new technology feels that they do not need much support (CD), or vice versa.

We performed a separate regression analysis for each independent variable to investigate whether scores on the subscales predicted teachers’ self-reported use of new technology in their teaching. For these analyses, we used all data. As can be seen from Table 3, each independent variable significantly predicted teachers’ self-reported new technology use. However, only self-efficacy explained a reasonable proportion of the variance in teachers’ new technology use (R² = 0.193).
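Each of these analyses is a simple (one-predictor) regression, in which R² equals the squared Pearson correlation between the subscale score and the use score. The sketch below shows the ordinary least squares computation under the assumption that each participant has one score per scale (for example, a mean item score); the function name and data are hypothetical, and significance tests are omitted.

```python
def simple_regression(x, y):
    """OLS fit of y = b0 + b1 * x with one predictor; returns (b0, b1, r_squared)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need paired data with at least three observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of squares and cross-products around the means.
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    r_squared = sxy ** 2 / (sxx * syy)  # squared Pearson correlation
    return b0, b1, r_squared


# Hypothetical self-efficacy scores (x) and new technology use scores (y).
b0, b1, r2 = simple_regression([2.0, 3.5, 3.0, 4.5, 4.0], [2, 3, 4, 5, 3])
print(f"b0 = {b0:.3f}, b1 = {b1:.3f}, R^2 = {r2:.3f}")
```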

Table 3 Regression analyses per variable (N = 659)

A prerequisite for determining concurrent validity is the expectation, based on theory, that, in this context, different groups of teachers have different attitudes towards the use of new technology in teaching. If such differences exist, one should be able to distinguish between these groups based on the scores of the participants on the instrument. In our study, we included two groups: pre- and in-service primary teachers.

However, only a few studies have compared the attitudes of pre- and in-service teachers towards the use of technology in their teaching. For example, Teo (2015) measured several attitudinal factors (PU, PEU, SN, SE) among pre- and in-service teachers and found that pre-service teachers had higher mean scores on these factors than in-service teachers, although this difference was not significant. Similarly, Mai (2014) and Agyei and Voogt (2011) found no significant differences between pre- and in-service teachers’ attitudes towards using technology. These results seem to indicate that there are no significant differences in attitudes between pre- and in-service teachers. To evaluate whether the TANT questionnaire is suitable for studying differences between pre- and in-service teachers’ attitudes towards using new technology, that is, whether both groups interpret the items and response scales in the same way, we evaluated the measurement invariance of the TANT questionnaire.

We conducted a multiple-group CFA to test the measurement invariance of the attitudinal factors (for a more in-depth explanation of measurement invariance, see Chen 2007). We explored configural invariance (i.e., the same factor structure in both groups), metric invariance (i.e., equal factor loadings) and scalar invariance (i.e., equal item intercepts), using the total respondent sample (N = 659).
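The three levels of invariance can be expressed with the usual linear factor model for a multiple-group CFA. The notation is an assumption for illustration, since the paper does not give its model equations. Here x_{ijg} is the response of teacher i to item j in group g, τ is the item intercept, λ the factor loading, η the latent attitudinal factor and ε the residual. Each level adds equality constraints across the two groups:

```latex
\begin{aligned}
x_{ijg} &= \tau_{jg} + \lambda_{jg}\,\eta_{ig} + \varepsilon_{ijg}, \qquad g \in \{\text{pre}, \text{in}\} \\
\text{configural:}\quad & \text{same pattern of free and fixed loadings } \lambda_{jg} \text{ in both groups} \\
\text{metric:}\quad & \lambda_{j,\text{pre}} = \lambda_{j,\text{in}} \quad \text{for all items } j \\
\text{scalar:}\quad & \lambda_{j,\text{pre}} = \lambda_{j,\text{in}} \ \text{and}\ \tau_{j,\text{pre}} = \tau_{j,\text{in}} \quad \text{for all items } j
\end{aligned}
```

Only when the intercepts are also equal (scalar invariance) can differences in observed means be attributed to differences in the latent factor rather than to item-level bias between the groups.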

To determine invariance, we examined differences in χ² (Δχ²) between the successive models (configural, metric, and scalar). However, because χ² is sensitive to sample size and model assumptions (e.g., linearity, multivariate normality; Byrne et al. 1989), we report Δχ² only for completeness. Cheung and Rensvold (2002) recommended using the change in CFI (ΔCFI) instead. In addition, Chen (2007) recommended also evaluating changes in RMSEA (ΔRMSEA) and SRMR (ΔSRMR).

Following Cheung and Rensvold (2002) and Chen (2007), metric invariance is supported when CFI decreases by no more than 0.01 (ΔCFI ≥ −0.01), RMSEA increases by no more than 0.015 (ΔRMSEA ≤ 0.015) and SRMR increases by no more than 0.03 (ΔSRMR ≤ 0.03). For scalar invariance, the same ΔCFI and ΔRMSEA criteria apply, but the SRMR criterion is stricter: ΔSRMR ≤ 0.01. To establish metric invariance, fit indices of the metric invariance model were compared with those of the configural invariance model. To establish scalar invariance, fit indices of the scalar invariance model were compared with those of the metric invariance model.
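The decision rules above can be written as a small function. This is a sketch, not the authors' code, and it rests on several assumptions. The class and function names are hypothetical. The fit indices must come from whatever SEM software fitted the models. The function also assumes a conservative reading in which all three criteria must be met; the cited sources can also be read as treating ΔRMSEA and ΔSRMR as supplements to ΔCFI. The example values are invented for illustration and are not taken from Table 4 or Table 5.

```python
from dataclasses import dataclass

# Small tolerance so values exactly at a threshold are not rejected by floating-point noise.
TOL = 1e-9

# Maximum allowed increase in SRMR per invariance level.
SRMR_MAX = {"metric": 0.03, "scalar": 0.01}


@dataclass
class Fit:
    """Fit indices of one fitted multiple-group CFA model (hypothetical container)."""
    cfi: float
    rmsea: float
    srmr: float


def invariance_supported(less: Fit, more: Fit, level: str) -> bool:
    """Compare a more constrained model with the next less constrained one.

    level="metric": metric model (more) vs configural model (less).
    level="scalar": scalar model (more) vs metric model (less).
    Assumes all three criteria must hold.
    """
    if level not in SRMR_MAX:
        raise ValueError("level must be 'metric' or 'scalar'")
    d_cfi = more.cfi - less.cfi  # negative when the added constraints worsen fit
    d_rmsea = more.rmsea - less.rmsea
    d_srmr = more.srmr - less.srmr
    return (
        d_cfi >= -0.01 - TOL
        and d_rmsea <= 0.015 + TOL
        and d_srmr <= SRMR_MAX[level] + TOL
    )


# Invented fit values, for illustration only.
configural = Fit(cfi=0.950, rmsea=0.050, srmr=0.045)
metric = Fit(cfi=0.946, rmsea=0.049, srmr=0.050)
scalar = Fit(cfi=0.940, rmsea=0.051, srmr=0.052)

print(invariance_supported(configural, metric, "metric"))  # True
print(invariance_supported(metric, scalar, "scalar"))      # True
```

Comparing each model only with the next less constrained one mirrors the stepwise procedure described above. A failure at the metric step makes the scalar comparison uninformative.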

As can be seen from Table 4, fit indices for the configural model indicated acceptable fit. Furthermore, the metric and scalar Δ-indices were all within the recommended thresholds, indicating metric and scalar invariance. Therefore, we conclude that scores on the attitudinal factors measured with the TANT questionnaire may be compared between pre- and in-service teachers.

Table 4 Measurement invariance analysis for the attitudinal factors

To test measurement invariance for ‘new technology use’, we again performed a multiple-group CFA. As can be seen from Table 5, most fit indices for the configural model indicated acceptable fit. However, RMSEA was above the recommended threshold of 0.07. To explore whether partial invariance could be established, we evaluated whether omitting one or more items resulted in a better fit of the configural model. Removing item 5 (‘How often do you use new technology to connect learning in school with learning outside of school (such as a museum visit or environmental research)?’) improved the fit of the configural model. However, with item 5 omitted, the decrease in CFI for scalar invariance still exceeded the recommended threshold of 0.01. We therefore conclude that configural and metric invariance could be established for new technology use if item 5 is excluded, but scalar invariance could not. Consequently, pre- and in-service teachers’ scores on new technology use should be compared with care.

Table 5 Measurement invariance analysis for new technology use

To gain insight into the scores of pre- and in-service primary school teachers on the factors of the TANT questionnaire, we calculated the unweighted average scores for each attitudinal factor (see Table 6). These average scores give an indication of pre- and in-service primary school teachers’ attitudes towards using new technology. However, we do not compare the scores of the two groups, because that would require more in-depth analyses, which are outside the scope of this paper.
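Unweighted average scores give every item equal weight, in contrast to factor scores, which weight items by their estimated loadings. The sketch below assumes complete responses stored as one dictionary per respondent. The item identifiers (se1, se2, pd1, pd2) and the response values are hypothetical and do not correspond to the actual TANT items or data.

```python
from statistics import mean


def unweighted_scale_means(responses, scales):
    """Average score per scale, giving every item the same weight.

    responses: one dict per respondent, mapping item id -> Likert response.
    scales: dict mapping scale name -> list of item ids on that scale.
    Assumes every respondent answered every item (no missing-data handling).
    """
    result = {}
    for scale, items in scales.items():
        # Person-level scale score: plain mean of that person's item responses.
        person_scores = [mean(r[item] for item in items) for r in responses]
        # Group-level score: mean of the person-level scores.
        result[scale] = mean(person_scores)
    return result


# Hypothetical items and responses from two respondents (not study data).
scales = {"SE": ["se1", "se2"], "PD": ["pd1", "pd2"]}
responses = [
    {"se1": 2, "se2": 3, "pd1": 2, "pd2": 2},
    {"se1": 4, "se2": 3, "pd1": 3, "pd2": 1},
]
print(unweighted_scale_means(responses, scales))
```

To obtain separate scores for pre- and in-service teachers, the function can be called once on each group's responses.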

Table 6 Unweighted average scores for each attitudinal component

These unweighted average scores indicate that, overall, pre- and in-service primary school teachers considered it relevant to use new technology in their teaching to prepare students for their later lives (scores above the scale midpoint of 3), believed that new technology is a beneficial tool for teaching, did not view the use of new technology in their teaching as very difficult, had somewhat low self-efficacy, felt somewhat dependent on contextual factors, and perceived their social environment as neutral about the use of new technology in teaching.

Both pre- and in-service teachers reported using new technology most often to let students present what they had learned to each other. In-service teachers used new technology least often to let students collect and analyse data, and pre-service teachers used it least often to let students do programming. Overall, their self-reported use indicates that they used new technology in their teaching only a few times a year (see Table 7).

Table 7 Mean scores on new technology use items

The goal of this study was to develop an instrument to measure primary school teachers’ attitudes towards using new technology in teaching, as well as their actual use of new technology, and to evaluate its validity and reliability. Although many self-report instruments exist to measure teachers’ attitudes towards using technology, many of these instruments have limitations (as described in detail in the introduction). Some of these limitations may stem from the lack of a strong theoretical basis. To address this, in an earlier study (Authors), we used the well-known Theory of Planned Behaviour (Ajzen 1991, 2001) to identify the attitudinal factors that make up teachers’ attitudes towards using technology. With the TANT questionnaire, we aimed to measure these attitudinal factors.

To answer our first research question (RQ1: To what extent can the TANT questionnaire be used to validly and reliably measure pre- and in-service primary school teachers’ attitudes towards new technology?), we used both a data-driven (EFA) and a theory-driven (CFA) approach to evaluate the construct validity and reliability of the TANT questionnaire. The results of these analyses support the construct validity and reliability of the factors perceived relevance, perceived usefulness, perceived difficulty, self-efficacy, context-dependency, and subjective norms. Furthermore, our correlation analyses and additional analyses of variance show that, although these factors are related, they represent conceptually different constructs. Based on these results, we conclude that the TANT questionnaire, with these six attitudinal factors, is a valid and reliable instrument that can be used to gain insight into why some teachers implement new technology in their teaching while others do not. This might provide starting points for tailored teacher training. Furthermore, the TANT questionnaire can be used, for example as a pre- and post-test, to evaluate the effects of teacher training programs on teachers’ attitudes towards new technology use.

To answer our second research question (RQ2: To what extent can the TANT questionnaire be used to validly and reliably measure pre- and in-service primary school teachers’ actual new technology use?), we included an additional scale in the TANT questionnaire to measure teachers’ actual use of new technology. Results of our analyses showed that ‘new technology use’ is a clearly distinguishable factor that can be measured reliably. We added this scale because we agree with Scherer et al. (2020) that it is important to explore to what extent attitudinal factors influence teachers’ actual use of technology. In our study, we conducted separate regression analyses to explore the relationship between each attitudinal factor and teachers’ actual use of new technology. Although such regression analyses do not permit causal claims, we found that all factors were significant predictors of teachers’ actual use of new technology. This suggests that these attitudinal factors are important to consider if we wish to learn what motivates or discourages teachers to use new technology in their teaching.

To answer our third research question (RQ3: To what extent can the TANT questionnaire be used to explore differences between pre- and in-service primary school teachers’ attitudes towards new technology and their actual use of new technology?), we explored the measurement invariance of the attitudinal factors and of the new technology use scale. The attitudinal factors of the TANT questionnaire demonstrated full configural, metric and scalar invariance across pre- and in-service primary school teachers. This means that scores on the attitudinal factors may be compared between the two groups (Chen 2007). This is valuable, since few studies have explored differences in attitudes towards using technology between pre- and in-service primary school teachers. For new technology use, however, configural and metric invariance could be established only if item 5 was excluded, and scalar invariance could not be established. We therefore conclude that comparisons of pre- and in-service teachers’ scores on new technology use should be made with care.

Despite average to high scores on most attitudinal factors, both pre- and in-service teachers reported making very little use of new technology. A possible explanation may be the relatively low scores on self-efficacy, which, according to our regression analyses, was the factor that explained the most variance in new technology use (19.3%).

The low reported use of new technology could also be caused by the way new technology use was measured in this study. The items do not solely reflect teachers’ self-reported use of new technology but include statements about the way new technology is used. For example, the item: ‘How often do you use new technology to get students to collect and analyse data (such as sensors or apps on a smartphone) in your lessons?’ does not only reflect the use of new technology but also how often teachers let their students collect and analyse data. If a teacher does not let their students collect and analyse data (with or without new technology), the score on this item will be low.

Furthermore, other external factors (such as time and resources) might prevent teachers from using new technology (Khanlari 2016; Francom 2020). We aimed to measure teachers’ perceptions regarding such factors with the factor context-dependency. However, the factor analyses revealed cross-loadings and therefore several of the initially developed items were removed. As a result, we only analysed the results of teachers’ views regarding their dependency on training and support. In Appendix A, we propose several new items that might be used to expand the factor context-dependency to include teachers’ views regarding their dependency on other factors.

In this paper, we define ‘new technology’ as: “hardware and software that teachers can use to support and/or enrich their teaching practices. Some examples of hardware are: smartphones, tablets, 3D printers and educational robots (BeeBot, DASH). Software examples are: simulation software, design software, programming software and video-editing software.” However, this definition is broad and context-dependent, and the examples of hardware and software we provided might not be suitable to describe ‘new’ technology in other contexts. We therefore urge researchers who wish to use the TANT questionnaire to reflect upon the definition we provide and, if necessary, adjust the examples of hardware and software to better fit their context.

Furthermore, the current version of the TANT questionnaire is in Dutch. Although we carefully translated the items into English for this article (the translation was checked by a native speaker), cross-cultural validation is needed to establish the construct validity of the TANT questionnaire in other countries.

As a next step, we intend to explore whether the TANT questionnaire can be used to identify different groups of teachers based on their attitudes towards using new technology in teaching. This would allow us to identify possible barriers that certain groups of teachers experience or perceive when implementing new technology in their teaching. Identifying such barriers may provide useful starting points for the development of tailored teacher training programs that fit the needs of different groups of teachers.

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

The research described in this paper was funded by TechYourFuture, Centre of Expertise.